How to Log Your Data with MLflow

MLflow, MLOps, Data ScienceMastering data logging in MLOps for your AI workflowPhoto by Chris Liverani on UnsplashPrefaceData is one of the most critical components of the machine learning process. In fact, the quality of the data used in training a model often determines the success or failure of the entire project. While algorithms and models are important, they are powerless without data that is accurate, clean, and representative of the problem you’re trying to solve. Whether you are dealing with structured data, unstructured data, or large-scale datasets, the preparation and understanding of data lay the foundation for any machine learning system. A well-curated dataset can provide the necessary signals for a model to learn effectively, while poor or biased data can lead to incorrect predictions, overfitting, or even harmful societal impacts when models are deployed in real-world applications.With the increasing complexity of machine learning workflows, ensuring the reproducibility and traceability of experiments has become a key concern. This is where MLOps (Machine Learning Operations) comes into play. MLOps is the practice of bringing together data science and operations to automate and streamline machine learning workflows. One critical aspect of MLOps is the tracking of datasets throughout the entire lifecycle of a machine learning project. Tracking is not just about capturing the parameters and metrics of models; it’s equally important to log the datasets used during each phase of the process. This ensures that, down the line, when models are re-evaluated or retrained, the same datasets can be referenced, tested, or reused. It allows for better comparison of results, understanding of changes, and most importantly, ensuring that the results are reproducible by others. As a best practice for modern ML systems, I recommend that all ML practitioners worldwide log their data during training.Data Yesterday vs. Data Today?In this context, MLflow plays a pivotal role by offering a suite of tools that help streamline these MLOps practices. One of the key components of MLflow is its mlflow.data module, which provides the ability to log datasets as part of machine learning experiments. The mlflow.data module ensures that datasets are properly documented, their metadata is tracked, and they can be retrieved and reused in future runs. This helps prevent common problems like "data drift" where models start to perform worse because of subtle changes in the underlying data. MLflow’s ability to track datasets alongside models and parameters ensures that any new experiment can reliably compare results against previous runs using the exact same data, providing transparency and clarity.Photo by Brian McGowan on UnsplashOverviewThis article will guide you through the best practices of logging a dataset in MLflow using the California Housing Dataset as an example. We’ll explore mlflow.data interfaces and demonstrate how you can track and manage your dataset during an ML experiment.Imagine you are a data scientist working on a project to predict housing prices in California based on various features such as median income, population, and location. You’ve spent hours curating a dataset from multiple sources, cleaning it, and ensuring it’s ready for training. Now you’re ready to run your machine learning experiments. Logging your dataset at this stage is crucial because it serves as a snapshot of the exact data used in this specific training run. Should you need to revisit the experiment months later — perhaps to improve the model, tune hyperparameters, or audit the results — you want to ensure that you are using the same dataset to maintain consistency and comparability. Without logging your dataset, it’s easy to lose track of which version of the data was used, especially if the data is updated or changed over time.Without further ado, let’s get started!Set UpSetting up an MLflow server locally is straightforward. Use the following command:mlflow server --host 127.0.0.1 --port 8080Then set the tracking URI.mlflow.set_tracking_uri("http://127.0.0.1:8080")For more advanced configurations, refer to the MLflow documentation.The California housing datasetPhoto by Robert Bye on UnsplashFor this article, we are using the California housing dataset (CC BY license). However, you can apply the same principles to log and track any dataset of your choice.For more information on the California housing dataset, refer to this doc.Dataset and DatasetSourcemlflow.data.dataset.DatasetBefore diving into dataset logging, evaluation, and retrieval, it’s important to understand the concept of datasets in MLflow. MLflow provides the mlflow.data.dataset.Dataset object, which represents datasets used in with MLflow Tracking.class mlflow.data.dataset.Dataset(source: mlflow.data.dataset_source.DatasetSource, name: Optional[str] = None, digest: Optional[str] = None)This object comes with key properties:A required parameter, source (the data sourc

MLflow, MLOps, Data Science

Mastering data logging in MLOps for your AI workflow

Preface

Data is one of the most critical components of the machine learning process. In fact, the quality of the data used in training a model often determines the success or failure of the entire project. While algorithms and models are important, they are powerless without data that is accurate, clean, and representative of the problem you’re trying to solve. Whether you are dealing with structured data, unstructured data, or large-scale datasets, the preparation and understanding of data lay the foundation for any machine learning system. A well-curated dataset can provide the necessary signals for a model to learn effectively, while poor or biased data can lead to incorrect predictions, overfitting, or even harmful societal impacts when models are deployed in real-world applications.

With the increasing complexity of machine learning workflows, ensuring the reproducibility and traceability of experiments has become a key concern. This is where MLOps (Machine Learning Operations) comes into play. MLOps is the practice of bringing together data science and operations to automate and streamline machine learning workflows. One critical aspect of MLOps is the tracking of datasets throughout the entire lifecycle of a machine learning project. Tracking is not just about capturing the parameters and metrics of models; it’s equally important to log the datasets used during each phase of the process. This ensures that, down the line, when models are re-evaluated or retrained, the same datasets can be referenced, tested, or reused. It allows for better comparison of results, understanding of changes, and most importantly, ensuring that the results are reproducible by others. As a best practice for modern ML systems, I recommend that all ML practitioners worldwide log their data during training.

Data Yesterday vs. Data Today?

In this context, MLflow plays a pivotal role by offering a suite of tools that help streamline these MLOps practices. One of the key components of MLflow is its mlflow.data module, which provides the ability to log datasets as part of machine learning experiments. The mlflow.data module ensures that datasets are properly documented, their metadata is tracked, and they can be retrieved and reused in future runs. This helps prevent common problems like "data drift" where models start to perform worse because of subtle changes in the underlying data. MLflow’s ability to track datasets alongside models and parameters ensures that any new experiment can reliably compare results against previous runs using the exact same data, providing transparency and clarity.

Overview

This article will guide you through the best practices of logging a dataset in MLflow using the California Housing Dataset as an example. We’ll explore mlflow.data interfaces and demonstrate how you can track and manage your dataset during an ML experiment.

Imagine you are a data scientist working on a project to predict housing prices in California based on various features such as median income, population, and location. You’ve spent hours curating a dataset from multiple sources, cleaning it, and ensuring it’s ready for training. Now you’re ready to run your machine learning experiments. Logging your dataset at this stage is crucial because it serves as a snapshot of the exact data used in this specific training run. Should you need to revisit the experiment months later — perhaps to improve the model, tune hyperparameters, or audit the results — you want to ensure that you are using the same dataset to maintain consistency and comparability. Without logging your dataset, it’s easy to lose track of which version of the data was used, especially if the data is updated or changed over time.

Without further ado, let’s get started!

Set Up

Setting up an MLflow server locally is straightforward. Use the following command:

mlflow server --host 127.0.0.1 --port 8080

Then set the tracking URI.

mlflow.set_tracking_uri("http://127.0.0.1:8080")For more advanced configurations, refer to the MLflow documentation.

The California housing dataset

For this article, we are using the California housing dataset (CC BY license). However, you can apply the same principles to log and track any dataset of your choice.

For more information on the California housing dataset, refer to this doc.

Dataset and DatasetSource

mlflow.data.dataset.Dataset

Before diving into dataset logging, evaluation, and retrieval, it’s important to understand the concept of datasets in MLflow. MLflow provides the mlflow.data.dataset.Dataset object, which represents datasets used in with MLflow Tracking.

class mlflow.data.dataset.Dataset(source: mlflow.data.dataset_source.DatasetSource, name: Optional[str] = None, digest: Optional[str] = None)

This object comes with key properties:

- A required parameter, source (the data source of your dataset as mlflow.data.dataset_source.DatasetSource object)

- digest (fingerprint for your dataset) and name (name for your dataset), which can be set via parameters.

- schema and profile to describe the dataset’s structure and statistical properties.

- Information about the dataset’s source, such as its storage location.

You can easily convert the dataset into a dictionary using to_dict() or a JSON string using to_json().

Support for Popular Dataset Formats

MLflow makes it easy to work with various types of datasets through specialized classes that extend the core mlflow.data.dataset.Dataset. At the time of writing this article, here are some of the notable dataset classes supported by MLflow:

- pandas: mlflow.data.pandas_dataset.PandasDataset

- NumPy: mlflow.data.numpy_dataset.NumpyDataset

- Spark: mlflow.data.spark_dataset.SparkDataset

- Hugging Face: mlflow.data.huggingface_dataset.HuggingFaceDataset

- TensorFlow: mlflow.data.tensorflow_dataset.TensorFlowDataset

- Evaluation Datasets: mlflow.data.evaluation_dataset.EvaluationDataset

All these classes come with a convenient mlflow.data.from_* API for loading datasets directly into MLflow. This makes it easy to construct and manage datasets, regardless of their underlying format.

mlflow.data.dataset_source.DatasetSource

The mlflow.data.dataset.DatasetSource class is used to represent the origin of the dataset in MLflow. When creating a mlflow.data.dataset.Dataset object, the source parameter can be specified either as a string (e.g., a file path or URL) or as an instance of the mlflow.data.dataset.DatasetSource class.

class mlflow.data.dataset_source.DatasetSource

If a string is provided as the source, MLflow internally calls the resolve_dataset_source function. This function iterates through a predefined list of data sources and DatasetSource classes to determine the most appropriate source type. However, MLflow's ability to accurately resolve the dataset's source is limited, especially when the candidate_sources argument (a list of potential sources) is set to None, which is the default.

In cases where the DatasetSource class cannot resolve the raw source, an MLflow exception is raised. For best practices, I recommend explicitly create and use an instance of the mlflow.data.dataset.DatasetSource class when defining the dataset's origin.

- class HTTPDatasetSource(DatasetSource)

- class DeltaDatasetSource(DatasetSource)

- class FileSystemDatasetSource(DatasetSource)

- class HuggingFaceDatasetSource(DatasetSource)

- class SparkDatasetSource(DatasetSource)

Get an email whenever Jack Chang publishes.

Logging datasets with mlflow.log_input() API

One of the most straightforward ways to log datasets in MLflow is through the mlflow.log_input() API. This allows you to log datasets in any format that is compatible with mlflow.data.dataset.Dataset, which can be extremely helpful when managing large-scale experiments.

Step-by-Step Guide

First, let’s fetch the California Housing dataset and convert it into a pandas.DataFrame for easier manipulation. Here, we create a dataframe that combines both the feature data (california_data) and the target data (california_target).

california_housing = fetch_california_housing()

california_data: pd.DataFrame = pd.DataFrame(california_housing.data, columns=california_housing.feature_names)

california_target: pd.DataFrame = pd.DataFrame(california_housing.target, columns=['Target'])

california_housing_df: pd.DataFrame = pd.concat([california_data, california_target], axis=1)

To log the dataset with meaningful metadata, we define a few parameters like the data source URL, dataset name, and target column. These will provide helpful context when retrieving the dataset later.

If we look deeper in the fetch_california_housing source code, we can see the data was originated from https://www.dcc.fc.up.pt/~ltorgo/Regression/cal_housing.tgz.

dataset_source_url: str = 'https://www.dcc.fc.up.pt/~ltorgo/Regression/cal_housing.tgz'

dataset_source: DatasetSource = HTTPDatasetSource(url=dataset_source_url)

dataset_name: str = 'California Housing Dataset'

dataset_target: str = 'Target'

dataset_tags = {

'description': california_housing.DESCR,

}

Once the data and metadata are defined, we can convert the pandas.DataFrame into an mlflow.data.Dataset object.

dataset: PandasDataset = mlflow.data.from_pandas(

df=california_housing_df, source=dataset_source, targets=dataset_target, name=dataset_name

)

print(f'Dataset name: {dataset.name}')

print(f'Dataset digest: {dataset.digest}')

print(f'Dataset source: {dataset.source}')

print(f'Dataset schema: {dataset.schema}')

print(f'Dataset profile: {dataset.profile}')

print(f'Dataset targets: {dataset.targets}')

print(f'Dataset predictions: {dataset.predictions}')

print(dataset.df.head())

Example Output:

Dataset name: California Housing Dataset

Dataset digest: 55270605

Dataset source:

Dataset schema: ['MedInc': double (required), 'HouseAge': double (required), 'AveRooms': double (required), 'AveBedrms': double (required), 'Population': double (required), 'AveOccup': double (required), 'Latitude': double (required), 'Longitude': double (required), 'Target': double (required)]

Dataset profile: {'num_rows': 20640, 'num_elements': 185760}

Dataset targets: Target

Dataset predictions: None

MedInc HouseAge AveRooms AveBedrms Population AveOccup Latitude Longitude Target

0 8.3252 41.0 6.984127 1.023810 322.0 2.555556 37.88 -122.23 4.526

1 8.3014 21.0 6.238137 0.971880 2401.0 2.109842 37.86 -122.22 3.585

2 7.2574 52.0 8.288136 1.073446 496.0 2.802260 37.85 -122.24 3.521

3 5.6431 52.0 5.817352 1.073059 558.0 2.547945 37.85 -122.25 3.413

4 3.8462 52.0 6.281853 1.081081 565.0 2.181467 37.85 -122.25 3.422

Note that You can even convert the dataset to a dictionary to access additional properties like source_type:

for k,v in dataset.to_dict().items():

print(f"{k}: {v}")

name: California Housing Dataset

digest: 55270605

source: {"url": "https://www.dcc.fc.up.pt/~ltorgo/Regression/cal_housing.tgz"}

source_type: http

schema: {"mlflow_colspec": [{"type": "double", "name": "MedInc", "required": true}, {"type": "double", "name": "HouseAge", "required": true}, {"type": "double", "name": "AveRooms", "required": true}, {"type": "double", "name": "AveBedrms", "required": true}, {"type": "double", "name": "Population", "required": true}, {"type": "double", "name": "AveOccup", "required": true}, {"type": "double", "name": "Latitude", "required": true}, {"type": "double", "name": "Longitude", "required": true}, {"type": "double", "name": "Target", "required": true}]}

profile: {"num_rows": 20640, "num_elements": 185760}

Now that we have our dataset ready, it’s time to log it in an MLflow run. This allows us to capture the dataset’s metadata, making it part of the experiment for future reference.

with mlflow.start_run():

mlflow.log_input(dataset=dataset, context='training', tags=dataset_tags)

???? View run sassy-jay-279 at: http://127.0.0.1:8080/#/experiments/0/runs/5ef16e2e81bf40068c68ce536121538c

???? View experiment at: http://127.0.0.1:8080/#/experiments/0

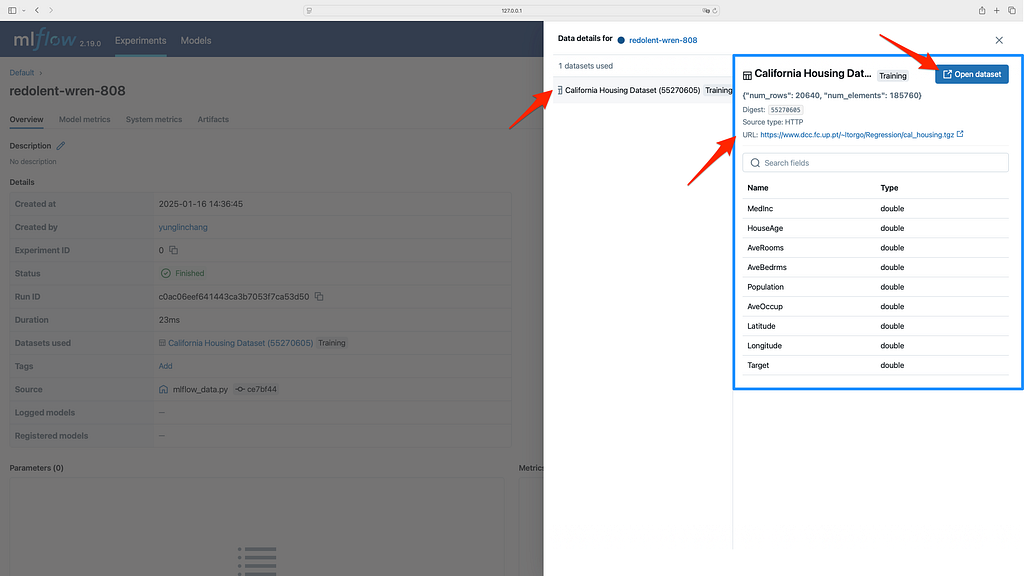

Let’s explore the dataset in the MLflow UI (). You’ll find your dataset listed under the default experiment. In the Datasets Used section, you can view the context of the dataset, which in this case is marked as being used for training. Additionally, all the relevant fields and properties of the dataset will be displayed.

Congrats! You have logged your first dataset!

Logging datasets when evaluating mlflow.evaluate() API

Let’s continue our journey by exploring how to evaluate datasets using the mlflow.evaluate() API. This functionality, which integrates datasets with MLflow’s evaluation framework, was introduced in MLflow 2.8.0. Users of earlier versions of MLflow will not have access to this feature.

Step-by-Step Guide

First, let’s perform a train-test split on the California housing data:

X_train, X_test, y_train, y_test = train_test_split(california_data, california_target, test_size=0.25, random_state=42)

For this part, we will be using similar metadata to create the training dataset but note that the training and evaluation datasets have different names.

training_dataset_name: str = 'California Housing Training Dataset'

training_dataset_target: str = 'Target'

eval_dataset_name: str = 'California Housing Evaluation Dataset'

eval_dataset_target: str = 'Target'

eval_dataset_prediction: str = 'Prediction'

For modeling, let’s fit a Random Forest Regression model.

model = RandomForestRegressor(random_state=42)

model.fit(X_train, y_train.to_numpy().flatten())

Once the model is trained, we need to prepare an evaluation dataset. The mlflow.data.from_pandas() function will be used to create this dataset, which will be passed to the mlflow.evaluate() function for model evaluation. Note that the predictions parameter is specified here to indicate the column containing the model's predicted output.

y_test_pred: pd.Series = model.predict(X=X_test)

eval_df: pd.DataFrame = X_test.copy()

eval_df[eval_dataset_target] = y_test.to_numpy().flatten()

eval_df[eval_dataset_prediction] = y_test_pred

eval_dataset: PandasDataset = mlflow.data.from_pandas(

df=eval_df, targets=eval_dataset_target, name=eval_dataset_name, predictions=eval_dataset_prediction

)

With the training and evaluation datasets prepared, it’s time to log the model and evaluate its performance using MLflow.

mlflow.sklearn.autolog()

with mlflow.start_run():

mlflow.log_input(dataset=training_dataset, context='training')

mlflow.sklearn.log_model(model, artifact_path='rf', input_example=X_test)

result = mlflow.evaluate(

data=eval_dataset,

predictions=None,

model_type='regressor',

)

print(f'metrics: {result.metrics}')

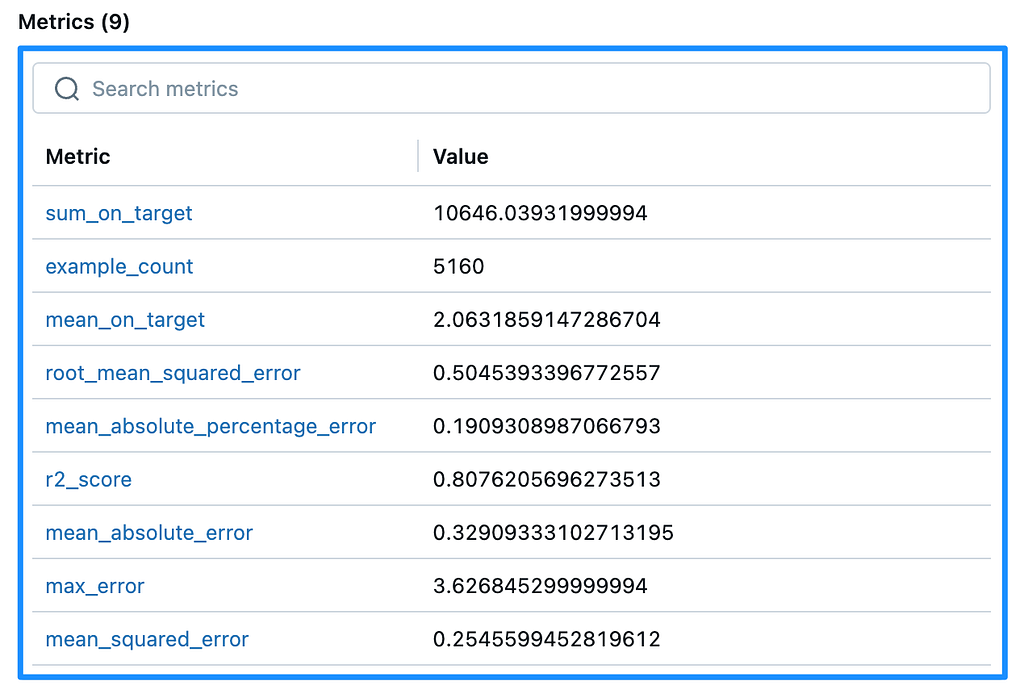

The Default Evaluator is used here, and it logs several important metrics automatically:

example_count, mean_absolute_error, mean_squared_error, root_mean_squared_error, sum_on_target, mean_on_target, r2_score, max_error, mean_absolute_percentage_error

These metrics can be found in the Metrics section of the experiment run in the MLflow UI. I recommend experimenting with different model types using mlflow.evaluate to explore the full capabilities of the Default Evaluator. It provides a range of valuable metrics as well as useful visualizations.

Note that if you’re working with an MLflow PandasDataset, you must specify the column containing the model’s predicted output using the predictions parameter in the mlflow.data.from_pandas() function. When calling mlflow.evaluate(), set predictions = None because the predictions column is already included in the dataset. This ensures proper integration and evaluation.

Example Output:

2025/01/16 15:11:36 INFO mlflow.models.evaluation.default_evaluator: Testing metrics on first row...

2025/01/16 15:11:37 INFO mlflow.models.evaluation.evaluators.shap: Shap explainer ExactExplainer is used.

metrics: {'example_count': 5160, 'mean_absolute_error': np.float64(0.32909333102713195), 'mean_squared_error': np.float64(0.2545599452819612), 'root_mean_squared_error': np.float64(0.5045393396772557), 'sum_on_target': np.float64(10646.03931999994), 'mean_on_target': np.float64(2.0631859147286704), 'r2_score': 0.8076205696273513, 'max_error': np.float64(3.626845299999994), 'mean_absolute_percentage_error': np.float64(0.1909308987066793)}

???? View run bouncy-fox-193 at: http://127.0.0.1:8080/#/experiments/0/runs/65b25856e28142fd85c54b38db4f2b3d

???? View experiment at: http://127.0.0.1:8080/#/experiments/0

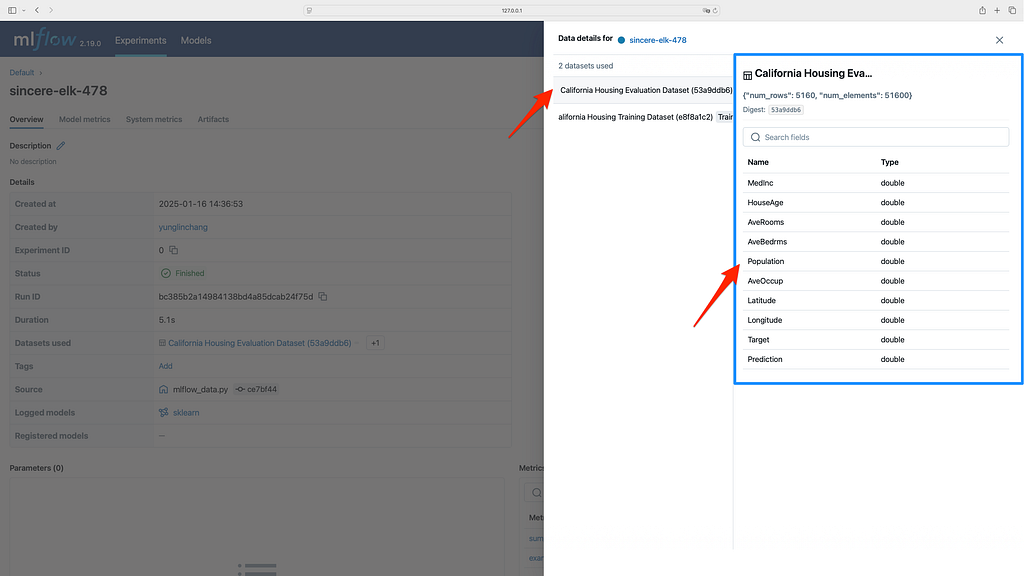

Let’s head over to the MLflow UI to view the results. You will see that our evaluation dataset has been successfully logged within the same run as the training. As a result, the run now contains both the training and evaluation datasets.

Epilogue

Logging datasets is critical not just for reproducibility but also for accountability. For instance, if you are working in a regulated industry such as healthcare or finance, it is essential to demonstrate that the data used to train a model meets certain standards and has not been altered without proper tracking. In collaborative projects, being able to share your dataset logs also facilitates more efficient collaboration and sharing of results. By logging datasets with tools like MLflow, you ensure that your experiments are transparent, reproducible, and robust, helping build trust in your machine learning outcomes.

In summary, datasets are the heart of machine learning, and logging them is fundamental to tracking, reproducibility, and transparency within any MLOps workflow. MLflow’s mlflow.data module provides the tools necessary to ensure that every step of the data journey is captured, logged, and retrievable for future use, ensuring consistency and improving the overall reliability of machine learning experiments.

Here is the Github repo for all the codes in the article!

Recap and Takeaways

- Logging datasets with mlflow.log_input() API: This is used for logging your training data, ensuring that all relevant metadata is captured for traceability and reproducibility within your experiments.

- Logging datasets when evaluating with mlflow.evaluate() API: This is used for evaluating your models and automatically logs key performance metrics, helping you track the effectiveness of your model during evaluation.

Get an email whenever Jack Chang publishes.

Feel free to share your thoughts in the comments. I love to learn about data and reflect on (write about) what I’ve learned in practical applications. If you enjoyed this article, please give it a clap to show your support. You can contact me via LinkedIn if you have more to discuss. Also, feel free to follow me on Medium for more data science articles to come!

Come play along in the data science playground!

How to Log Your Data with MLflow was originally published in Towards Data Science on Medium, where people are continuing the conversation by highlighting and responding to this story.

Editor-Admin

Editor-Admin