Extracting Structured Vehicle Data from Images

Build an Automated Vehicle Documentation System that Extracts Structured Information from Images, using OpenAI API, LangChain and Pydantic.Image was generated by author on PicLumenIntroductionImagine there is a camera monitoring cars at an inspection point, and your mission is to document complex vehicle details — type, license plate number, make, model and color. The task is challenging — classic computer vision methods struggle with varied patterns, while supervised deep learning requires integrating multiple specialized models, extensive labeled data, and tedious training. Recent advancements in the pre-trained Multimodal LLMs (MLLMs) field offer fast and flexible solutions, but adapting them for structured outputs requires adjustments.In this tutorial, we’ll build a vehicle documentation system that extracts essential details from vehicle images. These details will be extracted in a structured format, making it accessible for further downstream use. We’ll use OpenAI’s GPT-4 to extract the data, Pydantic to structure the outputs, and LangChain to orchestrate the pipeline. By the end, you’ll have a practical pipeline for transforming raw images into structured, actionable data.This tutorial is aimed at computer vision practitioners, data scientists, and developers who are interested in using LLMs for visual tasks. The full code is provided in an easy-to-use Colab notebook to help you follow along step-by-step.Technology StackGPT-4 Vision Model: GPT-4 is a multimodal model developed by OpenAI, capable of understanding both text and images [1]. Trained on vast amounts of multimodal data, it can generalize across a wide variety of tasks in a zero-shot manner, often without the need for fine-tuning. While the exact architecture and size of GPT-4 have not been publicly disclosed, its capabilities are among the most advanced in the field. GPT-4 is available via the OpenAI API on a paid token basis. In this tutorial, we use GPT-4 for its excellent zero-shot performance, but the code allows for easy swapping with other models based on your needs.LangChain: For building the pipeline, we will use LangChain. LangChain is a powerful framework that simplifies complex workflows, ensures consistency in the code, and makes it easy to switch between LLM models [2]. In our case, Langchain will help us to link the steps of loading images, generating prompts, invoking the GPT model, and parsing the output into structured data.Pydantic: Pydantic is a powerful library for data validation in Python [3]. We’ll use Pydantic to define the structure of the expected output from the GPT-4 model. This will help us ensure that the output is consistent and easy to work with.Dataset OverviewTo simulate data from a vehicle inspection checkpoint, we’ll use a sample of vehicle images from the ‘Car Number plate’ Kaggle dataset [4]. This dataset is available under the Apache 2.0 License. You can view the images below:Vehicle images from Car Number plate’ Kaggle datasetLets Code!Before diving into the practical implementation, we need to take care of some preparations:Generate an OpenAI API key— The OpenAI API is a paid service. To use the API, you need to sign up for an OpenAI account and generate a secret API key linked to the paid plan (learn more).Configure your OpenAI — In Colab, you can securely store your API key as an environment variables (secret), found on the left sidebar (????). Create a secret named OPENAI_API_KEY, paste your API key into the value field, and toggle ‘Notebook access’ on.Install and import the required libraries.Pipeline ArchitectureIn this implementation we will use LangChain’s chain abstraction to link together a sequence of steps in the pipeline. Our pipeline chain is composed of 4 components: an image loading component, a prompt generation component, an MLLM invoking component and a parser component to parse the LLM’s output into structured format. The inputs and outputs for each step in a chain are typically structured as dictionaries, where the keys represent the parameter names, and the values are the actual data. Let’s see how it works.Image Loading ComponentThe first step in the chain is loading the image and converting it into base64 encoding, since GPT-4 requires the image be in a text-based (base64) format.def image_encoding(inputs): """Load and Convert image to base64 encoding""" with open(inputs["image_path"], "rb") as image_file: image_base64 = base64.b64encode(image_file.read()).decode("utf-8") return {"image": image_base64}The inputs parameter is a dictionary containing the image path, and the output is a dictionary containing the based64-encoded image.Define the output structure with PydanticWe begin by specifying the required output structure using a class named Vehicle which inherits from Pydantic’s BaseModel. Each field (e.g., Type, Licence, Make, Model, Color) is defined using Field, which allows us to:Specify the output data type (e.g., str, int, list, etc.).Provide a descript

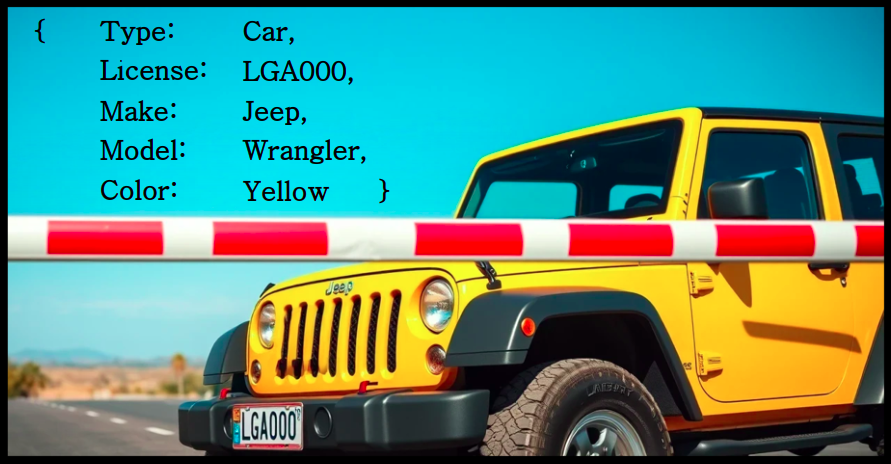

Build an Automated Vehicle Documentation System that Extracts Structured Information from Images, using OpenAI API, LangChain and Pydantic.

Introduction

Imagine there is a camera monitoring cars at an inspection point, and your mission is to document complex vehicle details — type, license plate number, make, model and color. The task is challenging — classic computer vision methods struggle with varied patterns, while supervised deep learning requires integrating multiple specialized models, extensive labeled data, and tedious training. Recent advancements in the pre-trained Multimodal LLMs (MLLMs) field offer fast and flexible solutions, but adapting them for structured outputs requires adjustments.

In this tutorial, we’ll build a vehicle documentation system that extracts essential details from vehicle images. These details will be extracted in a structured format, making it accessible for further downstream use. We’ll use OpenAI’s GPT-4 to extract the data, Pydantic to structure the outputs, and LangChain to orchestrate the pipeline. By the end, you’ll have a practical pipeline for transforming raw images into structured, actionable data.

This tutorial is aimed at computer vision practitioners, data scientists, and developers who are interested in using LLMs for visual tasks. The full code is provided in an easy-to-use Colab notebook to help you follow along step-by-step.

Technology Stack

- GPT-4 Vision Model: GPT-4 is a multimodal model developed by OpenAI, capable of understanding both text and images [1]. Trained on vast amounts of multimodal data, it can generalize across a wide variety of tasks in a zero-shot manner, often without the need for fine-tuning. While the exact architecture and size of GPT-4 have not been publicly disclosed, its capabilities are among the most advanced in the field. GPT-4 is available via the OpenAI API on a paid token basis. In this tutorial, we use GPT-4 for its excellent zero-shot performance, but the code allows for easy swapping with other models based on your needs.

- LangChain: For building the pipeline, we will use LangChain. LangChain is a powerful framework that simplifies complex workflows, ensures consistency in the code, and makes it easy to switch between LLM models [2]. In our case, Langchain will help us to link the steps of loading images, generating prompts, invoking the GPT model, and parsing the output into structured data.

- Pydantic: Pydantic is a powerful library for data validation in Python [3]. We’ll use Pydantic to define the structure of the expected output from the GPT-4 model. This will help us ensure that the output is consistent and easy to work with.

Dataset Overview

To simulate data from a vehicle inspection checkpoint, we’ll use a sample of vehicle images from the ‘Car Number plate’ Kaggle dataset [4]. This dataset is available under the Apache 2.0 License. You can view the images below:

Lets Code!

Before diving into the practical implementation, we need to take care of some preparations:

- Generate an OpenAI API key— The OpenAI API is a paid service. To use the API, you need to sign up for an OpenAI account and generate a secret API key linked to the paid plan (learn more).

- Configure your OpenAI — In Colab, you can securely store your API key as an environment variables (secret), found on the left sidebar (????). Create a secret named OPENAI_API_KEY, paste your API key into the value field, and toggle ‘Notebook access’ on.

- Install and import the required libraries.

Pipeline Architecture

In this implementation we will use LangChain’s chain abstraction to link together a sequence of steps in the pipeline. Our pipeline chain is composed of 4 components: an image loading component, a prompt generation component, an MLLM invoking component and a parser component to parse the LLM’s output into structured format. The inputs and outputs for each step in a chain are typically structured as dictionaries, where the keys represent the parameter names, and the values are the actual data. Let’s see how it works.

Image Loading Component

The first step in the chain is loading the image and converting it into base64 encoding, since GPT-4 requires the image be in a text-based (base64) format.

def image_encoding(inputs):

"""Load and Convert image to base64 encoding"""

with open(inputs["image_path"], "rb") as image_file:

image_base64 = base64.b64encode(image_file.read()).decode("utf-8")

return {"image": image_base64}

The inputs parameter is a dictionary containing the image path, and the output is a dictionary containing the based64-encoded image.

Define the output structure with Pydantic

We begin by specifying the required output structure using a class named Vehicle which inherits from Pydantic’s BaseModel. Each field (e.g., Type, Licence, Make, Model, Color) is defined using Field, which allows us to:

- Specify the output data type (e.g., str, int, list, etc.).

- Provide a description of the field for the LLM.

- Include examples to guide the LLM.

The ... (ellipsis) in each Field indicates that the field is required and cannot be omitted.

Here’s how the class looks:

class Vehicle(BaseModel):

Type: str = Field(

...,

examples=["Car", "Truck", "Motorcycle", 'Bus'],

description="Return the type of the vehicle.",

)

License: str = Field(

...,

description="Return the license plate number of the vehicle.",

)

Make: str = Field(

...,

examples=["Toyota", "Honda", "Ford", "Suzuki"],

description="Return the Make of the vehicle.",

)

Model: str = Field(

...,

examples=["Corolla", "Civic", "F-150"],

description="Return the Model of the vehicle.",

)

Color: str = Field(

...,

example=["Red", "Blue", "Black", "White"],

description="Return the color of the vehicle.",

)

Parser Component

To make sure the LLM output matches our expected format, we use the JsonOutputParser initialized with the Vehicle class. This parser validates that the output follows the structure we’ve defined, verifying the fields, types, and constrains. If the output does not match the expected format, the parser will raise a validation error.

The parser.get_format_instructions() method generates a string of instructions based on the schema from the Vehicle class. These instructions will be part of the prompt and will guide the model on how to structure its output so it can be parsed. You can view the instructions variable content in the Colab notebook.

parser = JsonOutputParser(pydantic_object=Vehicle)

instructions = parser.get_format_instructions()

Prompt Generation component

The next component in our pipeline is constructing the prompt. The prompt is composed of a system prompt and a human prompt:

- System prompt: Defined in the SystemMessage , and we use it to establish the AI’s role.

- Human prompt: Defined in the HumanMessage and consists of 3 parts: 1) Task description 2) Format instructions which we pulled from the parser, and 3) the image in a base64 format, and the image quality detail parameter.

The detail parameter controls how the model processes the image and generates its textual understanding [5]. It has three options: low, high or auto :

- low: The model processes a low resolution (512 x 512 px) version of the image, and represents the image with a budget of 85 tokens. This allows the API to return faster responses and consume fewer input tokens.

- high : The model first analyses a low resolution image (85 tokens) and then creates detailed crops using 170 tokens per 512 x 512px tile.

- auto : The default setting, where the low or high setting is automatically chosen by the image size.

For our setup, low resolution is sufficient, but other application may benefit from high resolution option.

Here’s the implementation for the prompt creation step:

@chain

def prompt(inputs):

"""Create the prompt"""

prompt = [

SystemMessage(content="""You are an AI assistant whose job is to inspect an image and provide the desired information from the image. If the desired field is not clear or not well detected, return none for this field. Do not try to guess."""),

HumanMessage(

content=[

{"type": "text", "text": """Examine the main vehicle type, make, model, license plate number and color."""},

{"type": "text", "text": instructions},

{"type": "image_url", "image_url": {"url": f"data:image/jpeg;base64,{inputs['image']}", "detail": "low", }}]

)

]

return prompt

The @chain decorator is used to indicate that this function is part of a LangChain pipeline, where the results of this function can be passed to the step in the workflow.

MLLM Component

The next step in the pipeline is invoking the MLLM to produce the information from the image, using the MLLM_response function.

First we initialize a multimodal GTP-4 model with ChatOpenAI, with the following configurations:

- model specifies the exact version of the GPT-4 model.

- temperature set to 0.0 to ensure a deterministic response.

- max_token Limits the maximum length of the output to 1024 tokens.

Next, we invoke the GPT-4 model using model.invoke with the assembled inputs, which include the image and prompt. The model processes the inputs and returns the information from the image.

Constructing the pipeline Chain

After all of the components are defined, we connect them with the | operator to construct the pipeline chain. This operator sequentially links the outputs of one step to the inputs of the next, creating a smooth workflow.

Inference on a Single Image

Now comes the fun part! We can extract information from a vehicle image by passing a dictionary containing the image path, to the pipeline.invoke method. Here’s how it works:

output = pipeline.invoke({"image_path": f"{img_path}"})The output is a dictionary with the vehicle details:

For further integration with databases or API responses, we can easily convert the output dictionary to JSON:

json_output = json.dumps(output)

Inference on a Batch of Images

LangChain simplifies batch inference by allowing you to process multiple images simultaneously. To do this, you should pass a list of dictionaries containing image paths, and invoking the pipeline using pipeline.batch:

# Prepare a list of dictionaries with image paths:

batch_input = [{"image_path": path} for path in image_paths]

# Perform batch inference:

output = pipeline.batch(batch_input)

The resulting output dictionary can be easily converted into tabular data, such as a Pandas DataFrame:

df = pd.DataFrame(output)

As we can see, the GPT-4 model correctly identified the vehicle type, licence plate, make, model and color, providing accurate and structured information. Where the details were not clearly visible, as in the motorcycle image, it returned ‘None’ as instructed in the prompt.

Concluding Remarks

In this tutorial we learned how to extract structured data from images and used it to build a vehicle documentation system. The same principles can be adapted to a wide range of other applications as well. We utilized the GPT-4 model, which showed strong performance in identifying vehicle details. However, our LangChain based implementation is flexible, allowing for easy integration with other MLLM models. While we achieved good results, it is important to remain mindful of potential allocations, which can be arise with LLM based models.

Practitioners should also consider potential privacy and safety risks when implementing similar systems. Though data in the OpenAI API platform is not used to train models by default [6], handling sensitive data requires adherence to proper regulations.

Full Code as Colab notebook:

https://medium.com/media/9060d984c987f9b0ddfb17e929d87c51/hrefThank you for reading!

Congratulations on making it all the way here. Click ????x50 to show your appreciation and raise the algorithm self esteem ????

Want to learn more?

- Explore additional articles I’ve written

- Subscribe to get notified when I publish articles

- Follow me on Linkedin

References

[1] GPT-4 Technical Report [link]

[2] LangChain [link]

[3] PyDantic [link]

[4] ‘Car Number plate’ Kaggle dataset [link]

[5] OpenAI — Low or high fidelity image understanding [link]

[6] Enterprise privacy at OpenAI [link]

Extracting Structured Vehicle Data from Images was originally published in Towards Data Science on Medium, where people are continuing the conversation by highlighting and responding to this story.

Editor-Admin

Editor-Admin