Hyperparameter Optimization with GPyOpt: A Bayesian Approach

Introduction

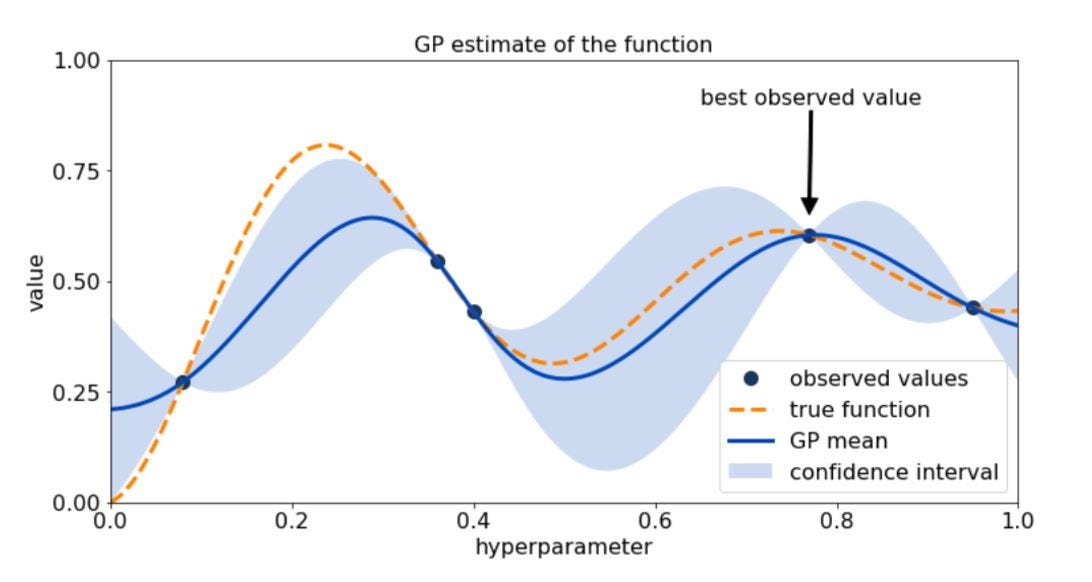

Hyperparameter tuning is an essential step in building high-performing machine learning models. The right hyperparameters can make the difference between a mediocre model and one that delivers actionable insights or robust predictions. However, the search is often computationally expensive and time-consuming, because every candidate configuration requires training and evaluating the model. Enter Bayesian Optimization, a framework that streamlines this process by exploring the hyperparameter space intelligently: it uses the results of previous evaluations to decide which configuration is most promising to try next.

In this post, I’ll show how I used GPyOpt, a Python library for Bayesian Optimization, to efficiently tune a neural network’s hyperparameters. The post explains Gaussian Processes, Bayesian Optimization, and how the two work together, then dives into the specifics of the model, the hyperparameters tuned, and the results of the optimization.
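GPyOpt handles the surrogate model and the acquisition function internally. To make the interplay between the two concrete, here is a minimal from-scratch sketch of the kind of loop it automates. This is not GPyOpt's implementation. A zero-mean Gaussian Process with an RBF kernel serves as the surrogate, and Expected Improvement picks the next point to evaluate. The sketch makes several simplifying assumptions: a single hyperparameter rescaled to [0, 1], a fixed kernel length scale rather than one fitted to the data, and a candidate grid in place of a continuous optimizer for the acquisition function. The function `validation_loss` is a hypothetical stand-in for training and evaluating the network.

```python
import math

import numpy as np


def rbf_kernel(a, b, length_scale=0.2, variance=1.0):
    """Squared-exponential (RBF) kernel between two 1-D arrays of points."""
    sq_dist = (a[:, None] - b[None, :]) ** 2
    return variance * np.exp(-0.5 * sq_dist / length_scale**2)


def gp_posterior(x_train, y_train, x_query, noise=1e-6):
    """Posterior mean and standard deviation of a zero-mean GP at x_query."""
    k_tt = rbf_kernel(x_train, x_train) + noise * np.eye(len(x_train))
    k_tq = rbf_kernel(x_train, x_query)
    k_qq_diag = np.ones(len(x_query))  # prior variance (kernel variance = 1.0)
    chol = np.linalg.cholesky(k_tt)
    alpha = np.linalg.solve(chol.T, np.linalg.solve(chol, y_train))
    mean = k_tq.T @ alpha
    v = np.linalg.solve(chol, k_tq)
    var = np.maximum(k_qq_diag - np.sum(v**2, axis=0), 1e-12)  # guard round-off
    return mean, np.sqrt(var)


def expected_improvement(mean, std, best_y, xi=0.01):
    """EI for minimisation: expected amount by which a point beats best_y."""
    improvement = best_y - mean - xi
    z = improvement / std
    cdf = 0.5 * (1.0 + np.array([math.erf(val / math.sqrt(2.0)) for val in z]))
    pdf = np.exp(-0.5 * z**2) / math.sqrt(2.0 * math.pi)
    # Clip tiny negative values caused by floating-point round-off.
    return np.maximum(improvement * cdf + std * pdf, 0.0)


def validation_loss(x):
    """Hypothetical stand-in for 'train the network, return validation loss'."""
    return (x - 0.3) ** 2 + 0.05 * math.sin(15.0 * x)


def bayesian_optimize(objective, n_init=3, n_iter=10, seed=0):
    """Minimise objective on [0, 1] with a GP surrogate and EI acquisition."""
    rng = np.random.default_rng(seed)
    candidates = np.linspace(0.0, 1.0, 201)
    x = rng.uniform(0.0, 1.0, size=n_init)  # random initial design
    y = np.array([objective(val) for val in x])
    for _ in range(n_iter):
        y_offset = y.mean()  # centre targets for the zero-mean GP prior
        mean, std = gp_posterior(x, y - y_offset, candidates)
        ei = expected_improvement(mean + y_offset, std, y.min())
        x_next = candidates[np.argmax(ei)]  # most promising candidate
        x = np.append(x, x_next)
        y = np.append(y, objective(x_next))
    best = np.argmin(y)
    return x[best], y[best], x, y


best_x, best_y, xs, ys = bayesian_optimize(validation_loss)
print(f"best x = {best_x:.3f}, best loss = {best_y:.4f}")
```

Each iteration refits the surrogate to every observation so far and runs the expensive objective only at the candidate with the highest Expected Improvement, which balances exploiting regions with a low predicted loss against exploring regions where the GP is still uncertain. A library such as GPyOpt additionally fits the kernel's own parameters to the observations and offers other acquisition functions, such as lower confidence bound.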

Editor-Admin